Ensuring a Smooth Entry: Your Complete Indian Visa Handbook

Understanding Missing Data

Accurate data is crucial for your Indian visa application process. Any missing information can delay or invalidate your submission. Here, you’ll learn about the different types of missing data and how they are represented.

Types of Missing Data

Missing data in your application can occur for various reasons. Understanding these types helps in choosing the right strategy for handling them.

- Missing Completely At Random (MCAR): Data is missing with no specific pattern or relation to any other data.

- Missing at Random (MAR): Data is missing but the missingness is related to some other observed data.

- Missing Not At Random (MNAR): The likelihood that a value is missing depends on the missing value itself, for example when applicants with low bank balances tend to leave the balance field blank.
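
The three mechanisms are easier to tell apart when simulated. The sketch below is illustrative only: the `age` and `income` columns, the probabilities, and the cutoffs are all assumptions chosen for the example, not values from any real visa dataset.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 1000

# Hypothetical applicant data (assumed columns and distributions).
df = pd.DataFrame({
    "age": rng.integers(18, 80, size=n),
    "income": rng.normal(50_000, 15_000, size=n),
})

# MCAR: every income value has the same 20% chance of being missing.
mcar_mask = rng.random(n) < 0.2

# MAR: missingness depends on an observed column (age), not on income itself.
mar_mask = rng.random(n) < np.where(df["age"] > 60, 0.5, 0.1)

# MNAR: missingness depends on the unobserved value itself (high incomes withheld).
mnar_mask = rng.random(n) < np.where(df["income"] > 65_000, 0.6, 0.05)

# Series.mask replaces the entries where the mask is True with NaN.
income_mcar = df["income"].mask(mcar_mask)
income_mar = df["income"].mask(mar_mask)
income_mnar = df["income"].mask(mnar_mask)
```

Comparing `income_mnar.mean()` with `df["income"].mean()` shows why MNAR is the hardest case: the observed values alone give a biased picture.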

Reasons for missing data range from incomplete forms and internet connection errors to user oversight. Each type requires specific handling to ensure your process remains smooth. For more details on visa types, see types of Indian visas and Indian visa for US citizens.

Representation of Missing Values

Missing values in a dataset can be marked in different ways. These representations depend on the data source and conventions.

| Data Source | Representation |
|---|---|
| Application Forms | Blank Fields |
| Online Forms | “null” |
| Database Entries | Null, NaN, -99, N/A |

Properly identifying these missing values ensures accurate data interpretation and correction. Whether applying for an Indian tourist visa, Indian business visa, or any other Indian visa, ensure your data is complete to avoid delays.
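
As a minimal sketch of that identification step, the representations in the table can be normalized to a single missing marker before counting gaps. The column names, sample records, and token list below are hypothetical; adjust them to whatever conventions your own data source uses.

```python
import numpy as np
import pandas as pd

# Hypothetical raw records mixing several missing-value conventions.
raw = pd.DataFrame({
    "passport_no": ["Z1234567", "", "N/A", "K7654321"],
    "age": [34, -99, 51, None],
    "city": ["Pune", "null", "Delhi", None],
})

# Text tokens treated as missing in text columns (an assumed list).
MISSING_TOKENS = ["", "null", "N/A"]

clean = raw.copy()
text_cols = ["passport_no", "city"]
clean[text_cols] = clean[text_cols].replace(MISSING_TOKENS, np.nan)

# -99 is treated as a sentinel only for the numeric "age" column.
clean["age"] = clean["age"].replace(-99, np.nan)

# Number of missing entries per column after normalization.
missing_per_column = clean.isna().sum()
```

Restricting the `-99` rule to one column is deliberate: a sentinel in one field can be a legitimate value in another.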

For additional help, refer to our Indian visa helpline.

Understanding the types and representation of missing data is essential to ensure your Indian visa process goes smoothly. Failing to correctly handle missing or incorrect data may lead to application rejection or delays. Explore further on our site for detailed information on Indian visa requirements and Indian visa processing time.

Strategies for Handling Missing Data

When managing your Indian visa application, it’s important to ensure that all required information is accurately filled. However, there are times when certain data might be missing. This section will explore two common strategies for handling missing data: deleting missing values and imputing missing values.

Deleting Missing Values

Deleting missing values can be a straightforward method to handle incomplete data, but it’s crucial to understand the potential loss of valuable information that comes with this technique.

Deleting Columns

If a column in your visa database has a significant number of missing values, it may be practical to delete the entire column. However, consider the information that may be lost by doing so (Handling missing values).

| Action | Pros | Cons |
|---|---|---|
| Deleting the column | Simplifies the dataset | Can result in loss of important information |
| Example Usage | Applicant’s optional middle name in a visa form | Removal may not impact essential visa application data |
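
One way to make "a significant number of missing values" concrete is to measure the missing share per column and drop columns above a cutoff. This is a sketch with hypothetical column names; the 50% threshold is an assumption, not a recommended value.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "surname": ["Rao", "Smith", "Khan", "Lee"],
    "middle_name": [np.nan, np.nan, "A.", np.nan],
    "age": [34, np.nan, 51, 28],
})

# Share of missing values in each column (0.0 to 1.0).
missing_share = df.isna().mean()

# Assumed cutoff: drop columns where more than half the values are missing.
THRESHOLD = 0.5
to_drop = missing_share[missing_share > THRESHOLD].index.tolist()
reduced = df.drop(columns=to_drop)
```

Here only `middle_name` (75% missing) is removed, while `age` (25% missing) is kept for row-level handling or imputation.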

Deleting Rows

Another approach is to delete rows with missing values. This method might be easy but can be drastic as it removes the entire row even if it only has one missing value (Handling missing values).

| Action | Pros | Cons |
|---|---|---|
| Deleting the row | Ensures no missing data in remaining dataset | Loss of potentially important data |
| Example Usage | Entries with incomplete addresses | All related information for the applicant is lost |
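
Row deletion does not have to be all-or-nothing. The sketch below, on hypothetical applicant records, contrasts the strict default of pandas `dropna` with two gentler variants that use its `subset` and `thresh` parameters.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "applicant_id": [1, 2, 3, 4],
    "address": ["12 MG Road", np.nan, "5 Park St", np.nan],
    "phone": ["555-0101", "555-0102", np.nan, np.nan],
})

# Drop a row if ANY column is missing (the drastic default).
strict = df.dropna()

# Drop a row only if a column you consider essential is missing.
by_address = df.dropna(subset=["address"])

# Keep rows that have at least 2 non-missing values.
lenient = df.dropna(thresh=2)
```

On this sample, `strict` keeps one applicant, `by_address` keeps two, and `lenient` keeps three, which shows how quickly the strict rule discards data.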

Imputing Missing Values

Imputation involves replacing missing data with substituted values. This method maintains the overall integrity of the dataset while addressing gaps. Choosing the appropriate imputation technique can help avoid bias and ensure you make accurate decisions (DataCamp).

| Imputation Technique | Use Case | Description |
|---|---|---|
| Mean/Median/Mode | Numerical and categorical data | Replaces missing values with the mean, median, or mode of the column (Analytics Vidhya) |
| Forward Fill/Backward Fill | Time-series or ordered data | Fills a gap with the last known value (forward fill) or the next known value (backward fill) |

Example Application

For your Indian tourist visa, you can use imputation methods to handle missing data effectively, ensuring that your application process is smooth and complete.

- Mean Imputation: Replacing a missing figure in the financial statements on a visa form with the average of the available values.

- Forward Fill: Using the last provided address to fill in subsequent missing address entries.

Understanding and correctly handling missing data is essential to ensuring a smooth visa application process and maintaining the data integrity crucial for visa approval.

Techniques for Imputing Missing Data

When dealing with missing data in your dataset, there are several techniques you can use to impute, or replace, these missing values. Here, we will discuss two commonly used methods: Mean/Median/Mode Imputation and Forward Fill and Backward Fill.

Mean/Median/Mode Imputation

Imputing missing data using the mean, median, or mode is a straightforward and widely used method. This approach involves replacing the missing values in a dataset with the mean, median, or mode of the available values.

- Mean Imputation: This method is best used for numerical data. It involves replacing the missing values with the average (mean) value of the available data.

- Median Imputation: Suitable for skewed distributions, this method replaces the missing values with the median value of the dataset.

- Mode Imputation: This method is used for categorical data or data with repeated measures. It replaces missing values with the most frequently occurring value (mode) in the dataset.

| Method | Suitable for | How it works |
|---|---|---|
| Mean Imputation | Numerical | Replaces missing values with the mean of available values |
| Median Imputation | Skewed Numerical | Replaces missing values with the median of available values |
| Mode Imputation | Categorical | Replaces missing values with the mode of available values |

For example, if you have a dataset where the ‘Age’ column has missing values:

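```python
mean_age = df['Age'].mean()  # missing values are skipped by default
df['Age'] = df['Age'].fillna(mean_age)  # assign back; chained inplace=True is unreliable in recent pandas
```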
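
Median and mode imputation follow the same pattern. The sketch below uses a small hypothetical frame; the `VisaType` column and its values are assumptions for illustration.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "Age": [25, 30, np.nan, 35, 90],
    "VisaType": ["Tourist", "Business", "Tourist", None, "Tourist"],
})

# The median is less sensitive than the mean to the outlier (90).
median_age = df["Age"].median()
df["Age"] = df["Age"].fillna(median_age)

# mode() returns a Series because ties are possible; take the first entry.
top_visa = df["VisaType"].mode().iloc[0]
df["VisaType"] = df["VisaType"].fillna(top_visa)
```

With these values the median fill is 32.5, whereas the mean of the observed ages would have been 45, pulled upward by the single outlier.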

Understanding how to handle missing data is crucial for maintaining data integrity. For more advanced methods, you can refer to our section on Advanced Imputation Methods.

Forward Fill and Backward Fill

Forward Fill and Backward Fill are methods commonly used for time series data. These techniques are especially useful when you believe that the previous or next values are relevant substitutes for the missing data.

- Forward Fill: This method fills the missing values with the last observed value.

- Backward Fill: This method fills the missing values with the next observed value.

| Method | Use Case | How it works |
|---|---|---|
| Forward Fill | Time Series | Fills missing values with the last observed value |
| Backward Fill | Time Series | Fills missing values with the next observed value |

For instance, if you are dealing with a dataset where the ‘Temperature’ column has missing values:

```python
# Use one or the other; fillna(method=...) is deprecated in recent pandas.
df['Temperature'] = df['Temperature'].ffill()  # Forward Fill
df['Temperature'] = df['Temperature'].bfill()  # Backward Fill
```
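
A small worked example makes the edge cases visible. The series below is hypothetical; it has a gap at the start, a two-value gap in the middle, and a gap at the end.

```python
import numpy as np
import pandas as pd

temps = pd.Series(
    [np.nan, 21.0, np.nan, np.nan, 24.0, np.nan],
    index=pd.date_range("2024-01-01", periods=6, freq="D"),
    name="Temperature",
)

forward = temps.ffill()         # leading gap stays missing: nothing precedes it
backward = temps.bfill()        # trailing gap stays missing: nothing follows it
both = temps.ffill().bfill()    # forward fill first, then back-fill what is left
capped = temps.ffill(limit=1)   # fill at most one consecutive missing value
```

The `limit` argument is worth considering for long gaps, where repeating a stale value many times may be less defensible than leaving the gap visible.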

These methods keep neighboring values consistent, which gives a more realistic dataset when the series changes slowly; across long gaps they flatten the trend instead of preserving it. For further insights into data handling, visit our resources on best practices for database design.

Choosing the right method for imputing missing data depends on your specific dataset and the nature of the missing values. By understanding both basic and advanced imputation techniques, you can ensure your data remains robust and reliable. For more tips on maintaining data integrity, refer to the importance of data integrity rules.

Advanced Imputation Methods

In certain cases, basic imputation techniques like mean, median, or mode imputation aren’t sufficient. Advanced methods such as random sampling and machine learning models provide more sophisticated ways to handle missing data.

Random Sampling

Random sampling involves replacing missing values with randomly selected elements from the same column. This method is particularly useful for categorical data. The main advantage is that it preserves the original distribution and variability in the data.

To implement random sampling:

- Identify the missing values.

- Replace each null value with a randomly chosen value from the non-missing elements of the same column.
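
The two steps above can be sketched as a small helper. The function name `random_sample_impute`, the `seed` parameter, and the sample values are hypothetical choices for this example.

```python
import numpy as np
import pandas as pd

def random_sample_impute(series: pd.Series, seed: int = 0) -> pd.Series:
    """Fill missing entries with values drawn at random from the observed ones."""
    rng = np.random.default_rng(seed)
    observed = series.dropna().to_numpy()
    missing = series.isna()          # step 1: identify the missing values
    filled = series.copy()
    # Step 2: draw with replacement from the non-missing values of the same column.
    filled[missing] = rng.choice(observed, size=int(missing.sum()))
    return filled

purpose = pd.Series(["Tourism", None, "Business", "Tourism", None, "Medical"])
imputed = random_sample_impute(purpose)
```

Fixing the seed makes the result reproducible, which helps when the imputed dataset needs to be audited later.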

Here’s a table comparing random sampling with other basic imputation methods:

| Method | Type of Data | Pros | Cons |
|---|---|---|---|
| Mean/Median/Mode | Numerical (mean, median); categorical (mode) | Simple and fast | Can introduce bias |
| Forward/Backward Fill | Time-series or ordered data | Maintains sequence | May not retain actual data distribution |
| Random Sampling | Categorical (also works for numerical) | Preserves original data distribution | Adds random noise to individual values |

For detailed instructions on managing missing values, see our guide on the types of Indian visas.

Using Machine Learning Models

Machine learning models offer a powerful approach for imputing missing data. Models such as Linear Regression or K-Nearest Neighbors (KNN) can predict missing values based on patterns learned from existing data. This approach can be more accurate than a single summary statistic when other columns help predict the missing one, as with data that is Missing at Random. It does not by itself correct for data that is Missing Not At Random.

Steps to use machine learning models for imputation:

- Split the data into sets with and without null values.

- Fit the model on the set without null values.

- Predict the missing values using the trained model.

- Combine the dataset with the imputed values.
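
The four steps above can be sketched with a simple linear fit. This example uses NumPy's `polyfit` as a stand-in for a regression model, and the `age` and `income` columns are hypothetical, deliberately chosen to lie on a straight line so the result is easy to check.

```python
import numpy as np
import pandas as pd

# Hypothetical data: "income" has gaps, "age" is fully observed.
df = pd.DataFrame({
    "age":    [25, 30, 35, 40, 45, 50],
    "income": [30_000, 35_000, np.nan, 45_000, np.nan, 55_000],
})

# 1. Split into rows with and without the target value.
known = df[df["income"].notna()]
unknown = df[df["income"].isna()]

# 2. Fit a straight line income = slope * age + intercept on the known rows.
slope, intercept = np.polyfit(known["age"], known["income"], deg=1)

# 3. Predict the missing values from the fitted line.
predicted = slope * unknown["age"] + intercept

# 4. Combine: write the predictions back into a copy of the original frame.
imputed = df.copy()
imputed.loc[unknown.index, "income"] = predicted
```

Real data will not be perfectly linear, so it is worth checking the fit on the known rows before trusting the predictions.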

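For example, the SimpleImputer class from the scikit-learn library replaces null values when you fit and transform it on the dataset (Medium). Note that SimpleImputer fills with a column statistic or a constant rather than a learned prediction; scikit-learn's KNNImputer is a model-based counterpart.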
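
A minimal sketch of the fit-and-transform pattern in scikit-learn follows, assuming the library is installed. The array values are hypothetical, and `KNNImputer` is included alongside `SimpleImputer` only for comparison.

```python
import numpy as np
from sklearn.impute import KNNImputer, SimpleImputer

# Hypothetical feature matrix: columns are age and income.
X = np.array([
    [25.0, 30_000.0],
    [30.0, np.nan],
    [35.0, 40_000.0],
    [np.nan, 45_000.0],
])

# SimpleImputer fills each column with a statistic of that column
# ("mean", "median", "most_frequent") or a constant.
X_mean = SimpleImputer(strategy="mean").fit_transform(X)

# KNNImputer fills a gap with the average of that feature over the
# k nearest rows, measured on the features that are present.
X_knn = KNNImputer(n_neighbors=2).fit_transform(X)
```

Both classes return a new array and leave `X` untouched, so the original gaps remain available for comparison.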

Here’s a comparison of different machine learning models for imputation:

| Model | Use Case | Pros | Cons |
|---|---|---|---|
| Linear Regression | Numerical | Simple implementation | Assumes a linear relationship |
| K-Nearest Neighbors | Numerical/Categorical | Uses similarity to fill gaps | Computationally intensive for large datasets |
| Random Forest | Numerical/Categorical | Handles a large variety of data types | Requires ample computational resources |

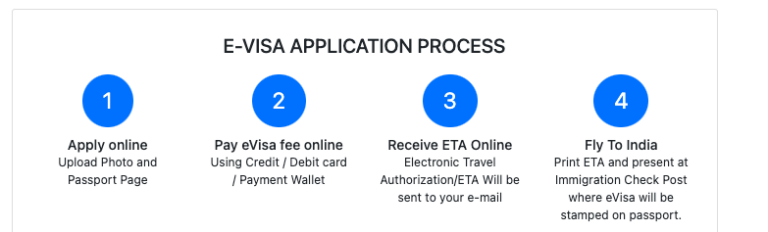

For more on effective data handling, take a look at the Indian visa application process.

Choosing the right imputation strategy, whether it be basic or advanced, is crucial to maintaining the integrity of your data. Ensure you assess the type of missing data and choose an appropriate method to avoid introducing bias (DataCamp).

Work through these methods to make informed decisions about handling missing data and to keep your data accurate and reliable. For more on maintaining data integrity, refer to best practices in database design.

Importance of Data Integrity

Ensuring data integrity is vital for maintaining a reliable and accurate database. Implementing best practices not only safeguards your data but also enhances its credibility and usability.

Enforcing Data Integrity Rules

One of the first steps in enforcing data integrity is to implement business rules within your database design. These rules ensure that the data entered into the system adheres to predefined standards and constraints. SQL facilities are often utilized for this purpose, covering aspects such as nullability, string length, and foreign key assignments (Simple Talk).

Here are some common business rules you can implement:

- Nullability: Define whether a column can have null values.

- String Length: Set maximum and minimum lengths for strings.

- Foreign Keys: Ensure foreign key constraints to maintain referential integrity between tables.
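
The three rules above can be tried out with Python's built-in `sqlite3` module. This is a sketch only: the table names, the column names, and the 6 to 9 character passport length are hypothetical, and other database engines express the same constraints with slightly different syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

conn.executescript("""
CREATE TABLE applicant (
    id          INTEGER PRIMARY KEY,
    passport_no TEXT NOT NULL                                  -- nullability
                CHECK (length(passport_no) BETWEEN 6 AND 9)    -- string length (assumed bounds)
);
CREATE TABLE application (
    id           INTEGER PRIMARY KEY,
    applicant_id INTEGER NOT NULL REFERENCES applicant(id),    -- foreign key
    visa_type    TEXT NOT NULL
);
""")

def try_insert(sql, params):
    """Return True if the row is accepted, False if a constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False

ok = try_insert("INSERT INTO applicant (id, passport_no) VALUES (?, ?)", (1, "Z1234567"))
null_rejected = not try_insert("INSERT INTO applicant (id, passport_no) VALUES (?, ?)", (2, None))
short_rejected = not try_insert("INSERT INTO applicant (id, passport_no) VALUES (?, ?)", (3, "AB"))
orphan_rejected = not try_insert(
    "INSERT INTO application (applicant_id, visa_type) VALUES (?, ?)", (99, "Tourist")
)
```

Putting the rules in the schema means every client is held to them, rather than relying on each application to validate its own input.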

Best Practices for Database Design

Adopting best practices in your database design helps to avoid common pitfalls and ensures a robust and efficient database structure.

Use of Stored Procedures

Stored procedures are highly recommended due to their various benefits. They enhance maintainability, encapsulation, security, and performance, making collaboration between database and functional programmers more effective (Simple Talk).

| Feature | Benefits |
|---|---|
| Maintainability | Easier updates and debugging |
| Encapsulation | Encapsulates business logic |
| Security | Helps protect against SQL injection when inputs are passed as parameters |
| Performance | Cached, reusable execution plans |

Thorough Testing

Testing is often overlooked in database development, leading to significant issues in production, including performance-related problems. Thorough testing during development helps to identify and rectify bugs early on (Simple Talk).

For more tips on ensuring data accuracy and maintaining database efficiency, you can refer to our articles on Indian visa on arrival and Indian visa processing time.

By enforcing data integrity rules and following best practices, you can create a reliable and efficient database system that supports accurate and easy data management. For additional guidance on specific use cases, see our resources on Indian visa application process and Indian visa documents checklist.

Common Database Design Mistakes

Creating a robust database system is essential to ensuring smooth visa application and processing. Avoiding common mistakes can save you from potential headaches. Here are two critical areas to focus on: inadequate testing in development and avoiding generic T-SQL objects.

Inadequate Testing in Development

Testing is a fundamental part of database design that should never be overlooked. Neglecting thorough testing can lead to significant bugs during production, which can impact performance (Simple Talk). Proper testing helps identify issues early, when they are easier and less costly to fix.

Key Testing Practices:

- Unit Testing: Ensure individual components of the database function correctly.

- Integration Testing: Validate that different modules or services work well together.

- Performance Testing: Check the system’s responsiveness and stability under various loads.
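
As a rough illustration of the first two practices, the sketch below uses Python's `unittest` with an in-memory SQLite database. The schema and test names are hypothetical, and performance testing is left out because it depends on realistic data volumes.

```python
import sqlite3
import unittest

SCHEMA = """
CREATE TABLE applicant (id INTEGER PRIMARY KEY, surname TEXT NOT NULL);
CREATE TABLE application (
    id INTEGER PRIMARY KEY,
    applicant_id INTEGER NOT NULL REFERENCES applicant(id),
    visa_type TEXT NOT NULL
);
"""

class SchemaTests(unittest.TestCase):
    def setUp(self):
        # A fresh in-memory database per test keeps the tests independent.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute("PRAGMA foreign_keys = ON")
        self.conn.executescript(SCHEMA)

    def tearDown(self):
        self.conn.close()

    def test_surname_is_required(self):
        # Unit test: a single constraint on a single table.
        with self.assertRaises(sqlite3.IntegrityError):
            self.conn.execute("INSERT INTO applicant (surname) VALUES (NULL)")

    def test_join_returns_application(self):
        # Integration test: two tables queried together.
        self.conn.execute("INSERT INTO applicant (id, surname) VALUES (1, 'Rao')")
        self.conn.execute(
            "INSERT INTO application (applicant_id, visa_type) VALUES (1, 'Tourist')"
        )
        row = self.conn.execute(
            "SELECT a.surname, p.visa_type FROM applicant a "
            "JOIN application p ON p.applicant_id = a.id"
        ).fetchone()
        self.assertEqual(row, ("Rao", "Tourist"))

suite = unittest.defaultTestLoader.loadTestsFromTestCase(SchemaTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Running the suite on every schema change catches a dropped constraint or a broken join before it reaches production.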

For a smoother visa application process, especially for complex visas like the Indian business visa, thorough database testing is crucial. Poorly tested environments can lead to delays that affect applicants.

Avoiding Generic T-SQL Objects

Using generic Transact-SQL (T-SQL) objects to handle multiple tables may seem efficient but can be detrimental to performance and effective database design. Generic objects can complicate maintenance and often lead to inefficient queries (Simple Talk).

Why Avoid Generic T-SQL Objects:

- Performance Issues: Generic objects can slow down database operations.

- Maintenance Complexity: More difficult to update and debug.

- Security Risks: Harder to enforce granular permissions.

Instead, consider using stored procedures, which offer better maintainability, encapsulation, security, and performance. This approach allows for clearer collaboration between database developers and functional programmers, ensuring your system is robust (Simple Talk).

Using a well-designed database system helps facilitate smoother processing of applications, whether you’re dealing with an Indian tourist visa or more specific types like an Indian medical visa. Avoiding these common mistakes ensures efficiency and reliability in your visa handling processes.